Exploring the Myth: Can ChatGPT Actually Be Detected in Your Writing?

Artificial intelligence has slipped into nearly every corner of how we read and write. It feels exciting at times, and at other times unsettling. ChatGPT is the one people talk about most. It can shape essays, draft reports, or assemble clean summaries that sound eerily close to what a real person might type out late at night. But a question still hangs in the air. Can we really tell when the words came from ChatGPT?

There is no simple yes or no. Some insist the line between human and machine writing has already disappeared. Others argue you can still spot the clues if you know where to look. The truth lies somewhere in the middle. To even begin answering, it helps to see how ChatGPT strings sentences together.

How ChatGPT Generates Text

ChatGPT doesn’t think the way we do. It predicts. Every word it produces is a guess at what is most likely to come next, and that guess draws on patterns absorbed from enormous amounts of text. The result is often smooth, sometimes too smooth. It reads well enough but can slip into a kind of mechanical rhythm. It’s wild, really.
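To make "it predicts" concrete, here is a toy sketch of next-word prediction. It is nowhere near how ChatGPT is actually built (that involves a large neural network, not word counts), and the function names `train_bigrams` and `predict_next` are made up for this illustration. It only shows the basic idea of guessing the next word from patterns seen before.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count which word follows which in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

# A tiny, made-up training text.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # prints "cat" ("cat" follows "the" twice, "mat" once)
```

Always picking the most frequent follower is what makes toy output like this feel repetitive. Real systems usually add some randomness to the choice, which is part of why their text is harder to pin down.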

I once looked at a student’s essay. Normally, their work had rough spots, missed commas, and uneven pacing. But this essay glided as if it had been polished by an editor. That raised suspicion. Human writing rarely stays perfectly even. We pause. We take detours. We repeat ourselves. ChatGPT often forgets that, and its steady rhythm becomes a tell.
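That "steady rhythm" can be put into rough numbers. The sketch below measures how much sentence lengths vary within a passage. It is a simple illustration, not the method any particular detector uses, and `rhythm_variation` is a name invented here. A low score means the sentences are all about the same length; it does not prove anything about who wrote them.

```python
import re
import statistics

def sentence_lengths(text):
    """Split on sentence-ending punctuation and count the words in each sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]

def rhythm_variation(text):
    """Standard deviation of sentence length divided by the mean length.

    0.0 means every sentence has the same number of words.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)

even = "The cat sat down. The dog ran off. The bird flew away."
uneven = "Wait. I never expected the essay to read quite this smoothly from start to finish. Odd."

print(rhythm_variation(even))    # prints 0.0 (three sentences of four words each)
print(rhythm_variation(uneven))  # a larger value, since the lengths swing widely
```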

Think of a business report. Perfect graphs. Balanced paragraphs. Everything lined up. Impressive at first glance. Yet something feels missing. The minor quirks, the lived experience, the sudden change of tone. That absence is what makes people ask whether a machine had a hand in it.

Why Detection Matters

The reason detection matters depends on where the writing shows up.

In classrooms, the concern is learning itself. If a student submits an assignment written entirely by ChatGPT, the whole purpose of practice and struggle is lost. Professors describe it as catching plagiarism in a new disguise.

In offices, the stakes are trust. A consultant leaning only on AI may hand in something that looks fine but drains credibility from the client relationship. Publishers face the same risk. Accuracy and originality can erode when there’s no way to separate machine polish from human thought.

Detection isn’t about punishing the use of new tools. It’s about holding on to honesty and keeping the human effort visible.

What Does This Mean for Students and Professionals?

The consequences are not small. For students, being accused of using AI, even wrongly, can feel like a gut punch. A failing grade is bad enough, but the real loss is credibility.

For professionals, the problem is quieter but still damaging. I remember a policy memo that looked perfect on the surface: facts correct, structure flawless. Yet it lacked the human touch, the messy insights that come from real work. That’s the weakness of leaning too hard on AI. The report may be correct, but it feels like anyone could have written it.

The discussion shouldn’t be framed as “ban AI” or “let it take over.” Balance is the key. AI can support, but it should not replace the effort that defines genuine work.

How Detection Tools Work in Practice

The rise of AI writing has given birth to its own industry of detectors. They scan, crunch numbers, and return a judgment: likely human or likely machine. These systems rely heavily on natural language processing and semantic analysis. Results are far from perfect, but they do shed light.

Take ZeroGPT. It looks at patterns and flow, then makes a call. It works decently in some cases. But check Reddit threads and you’ll find plenty of examples where it misfires. I’ve seen the same. It’s a decent pointer, not the final answer.

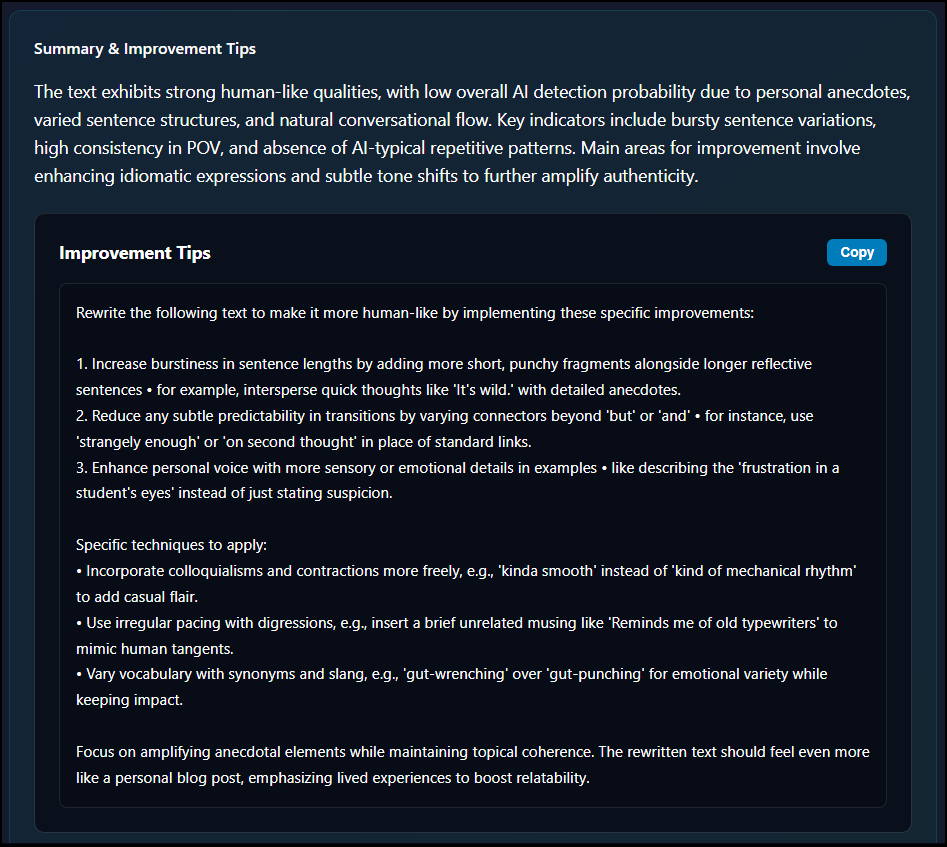

Then there’s GPTDetector. It doesn’t just drop a score. It explains why a passage feels mechanical and even offers ways to adjust it. That feedback turns the process into a learning tool. Helpful for students. Practical for professionals.

Beneath all this are the core methods. NLP checks sentence rhythm and word choice. Semantic analysis looks at meaning. Some tools also run a plagiarism check alongside, though that’s a different task: plagiarism software looks for copied text, while AI detectors search for the fingerprints of a system that writes too neatly.
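As a small illustration of a "word choice" check, the sketch below computes a type-token ratio: the share of words in a passage that are distinct. This is a classic, very simple measure of vocabulary variety. Commercial detectors don't publish their exact features, so treat it as an example of the kind of signal involved, not a description of what ZeroGPT or GPTDetector computes.

```python
import re

def type_token_ratio(text):
    """Distinct words divided by total words (1.0 means no word is repeated)."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return 0.0
    return len(set(words)) / len(words)

print(type_token_ratio("the the the the"))     # prints 0.25
print(type_token_ratio("one two three four"))  # prints 1.0
```

On its own a number like this says little. It depends heavily on the length and topic of the passage, which is one reason a single score should never settle the question.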

Can You Track ChatGPT Directly?

This is where the answer gets technical. ChatGPT leaves no hidden tag behind. There’s no watermark you can pull up later. Researchers are exploring watermarking methods, but they remain experimental. And if a human edits the AI text, the signals all but disappear.
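For the curious, here is a rough sketch of one watermarking idea discussed in research. The generator is nudged toward a secret, pseudo-random "green" subset of words at each step, and a checker later counts how many words landed in that subset. Everything in the code is an assumption made for illustration: the hashing scheme, the 50% split, and the names `is_green` and `watermark_z_score`. It does not describe anything ChatGPT actually does.

```python
import hashlib
import math

GREEN_FRACTION = 0.5  # assumed share of words treated as "green" at each step

def is_green(previous_word, word):
    """Pseudo-randomly decide whether `word` is green, seeded by the previous word."""
    digest = hashlib.sha256(f"{previous_word}|{word}".encode("utf-8")).digest()
    return digest[0] / 256 < GREEN_FRACTION

def watermark_z_score(text):
    """How far the count of green words sits above what chance alone would give.

    Unmarked text should hover around 0; a strongly positive score hints at a watermark.
    """
    words = text.lower().split()
    pairs = list(zip(words, words[1:]))
    if not pairs:
        return 0.0
    green = sum(is_green(prev, cur) for prev, cur in pairs)
    expected = GREEN_FRACTION * len(pairs)
    spread = math.sqrt(len(pairs) * GREEN_FRACTION * (1 - GREEN_FRACTION))
    return (green - expected) / spread

print(watermark_z_score("this sentence was typed by hand with no special word choices"))
```

The sketch also hints at why editing weakens the signal. Every word a human swaps or reorders changes which pairs count as green, pulling the score back toward chance.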

So the real answer is no. You can’t trace a block of text back to ChatGPT with certainty. What you can do is weigh probabilities, look for suspicious consistency, and build a case from there. That’s why most detectors speak in terms of “likely” rather than “proven.”

The Fairness Question

Detection is powerful but imperfect. A false positive can unfairly damage a student’s future. A false negative lets machine-generated text sneak by. Both outcomes carry weight.
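A bit of arithmetic shows why false positives deserve so much attention. The numbers below are invented for the example and are not measurements of any real detector: suppose 900 essays are written by students, 100 are AI-generated, and the tool wrongly flags 5% of human essays while catching 90% of AI ones.

```python
def share_of_flags_that_are_wrong(n_human, n_ai, false_positive_rate, true_positive_rate):
    """Fraction of all flagged texts that were actually written by a human."""
    false_flags = n_human * false_positive_rate
    true_flags = n_ai * true_positive_rate
    total_flags = false_flags + true_flags
    if total_flags == 0:
        return 0.0
    return false_flags / total_flags

# Hypothetical class: 900 human essays, 100 AI essays, 5% false positives, 90% detection.
share = share_of_flags_that_are_wrong(900, 100, 0.05, 0.90)
print(f"{share:.0%} of flagged essays were written by students")  # prints 33% ...
```

Under those assumed numbers, 45 honest students are flagged alongside 90 AI essays, so one flag in three points at the wrong person. Even a detector that sounds accurate can accuse a lot of innocent writers when most of the writing it sees is human.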

This is why educators and employers need to tread carefully. A tool should never be the only judge. Human review has to stay part of the process. Used wisely, detection can guide decisions. Used blindly, it can ruin trust.

Looking Ahead

AI will only get sharper. Each new model writes with more variety and fewer obvious tells. Detection will have to keep adapting. Future systems may lean on deeper semantic analysis, smarter NLP, and perhaps watermarking designed directly into the models.

For now, platforms like GPTDetector and ZeroGPT are valuable. GPTDetector even gives clear analysis, offers guidance, and reminds us that technology doesn’t replace judgment.

So can ChatGPT be detected? Not with complete certainty. But with careful tools, trained readers, and a commitment to fairness, the gap can be narrowed. And that may be enough to keep originality alive in a world where machines are learning to sound more and more like us.